Overview of HA Architecture

When implementing an active/active high-availability (HA) environment for Shares, you can deploy one of two types of architecture.

Both architectures use a load balancer that monitors the health of each Shares node and distributes traffic across all healthy nodes.

When the load balancer detects that a Shares node is unreachable, it automatically stops sending traffic to that node and redirects all traffic to the remaining healthy nodes.

When the faulty node becomes reachable again, the load balancer automatically detects that it is healthy and returns it to the pool of nodes that share traffic. The nodes share the load of both web traffic and FASP-based transfers, so all available servers are used.

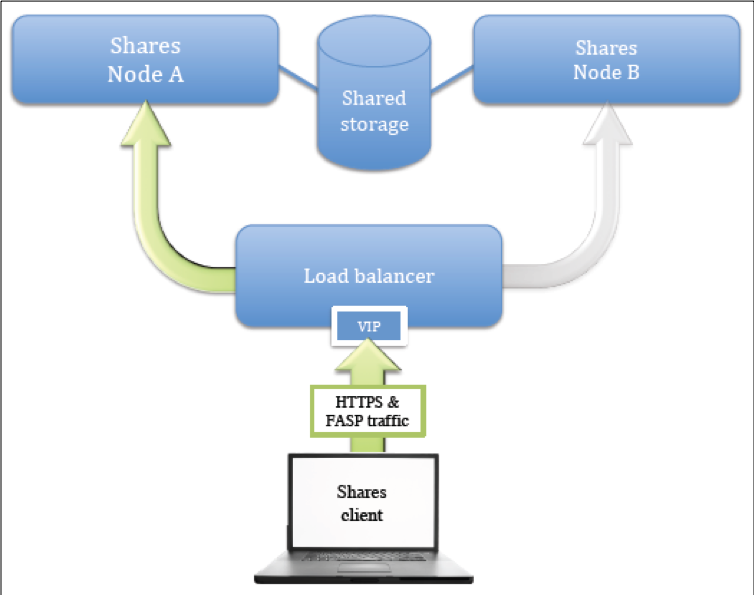
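The health-check behavior described above can be sketched as a small round-robin selector that skips unhealthy nodes. This is a hypothetical model, not the load balancer's actual implementation: `LoadBalancer`, `probe`, and the node names are illustrative assumptions, and a real load balancer runs its health checks periodically rather than on demand.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class LoadBalancer:
    """Hypothetical health-aware round-robin balancer (illustration only)."""
    nodes: list[str]
    # Assumed probe: returns True when a node answers its health check.
    probe: Callable[[str], bool]
    healthy: set[str] = field(default_factory=set)
    _next: int = 0

    def run_health_checks(self) -> None:
        # Re-probe every node: failed nodes drop out, recovered nodes rejoin.
        self.healthy = {n for n in self.nodes if self.probe(n)}

    def pick_node(self) -> str:
        # Rotate through the configured nodes, skipping unhealthy ones.
        for _ in range(len(self.nodes)):
            node = self.nodes[self._next % len(self.nodes)]
            self._next += 1
            if node in self.healthy:
                return node
        raise RuntimeError("no healthy node available")


status = {"node-a": True, "node-b": True}
lb = LoadBalancer(["node-a", "node-b"], probe=lambda n: status[n])
lb.run_health_checks()
print([lb.pick_node() for _ in range(4)])  # ['node-a', 'node-b', 'node-a', 'node-b']

status["node-b"] = False  # node B becomes unreachable
lb.run_health_checks()
print([lb.pick_node() for _ in range(2)])  # ['node-a', 'node-a']
```

Once node B answers its probe again, the next `run_health_checks()` call puts it back into rotation, mirroring the automatic rejoin described above.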

Architecture Type 1: Redirect All Traffic

In the first architecture, the load balancer is provisioned with a virtual IP address (VIP) for user access and manages all traffic related to the Shares service: web requests (HTTPS/TCP traffic) as well as FASP transfers (SSH/TCP and FASP/UDP traffic). A fully qualified domain name (FQDN), typically shares.mydomain.com, is used to access the Shares service and points to the VIP of the load balancer.

In this architecture, both Shares nodes can use private IP addresses. Only the VIP requires a public IP address, because clients use it to connect to the Shares service components.

Because FASP transfers account for most of the traffic generated by the Shares service, the load balancer must be powerful enough to handle that load. In some environments, this can mean a total bandwidth of up to several gigabits per second.

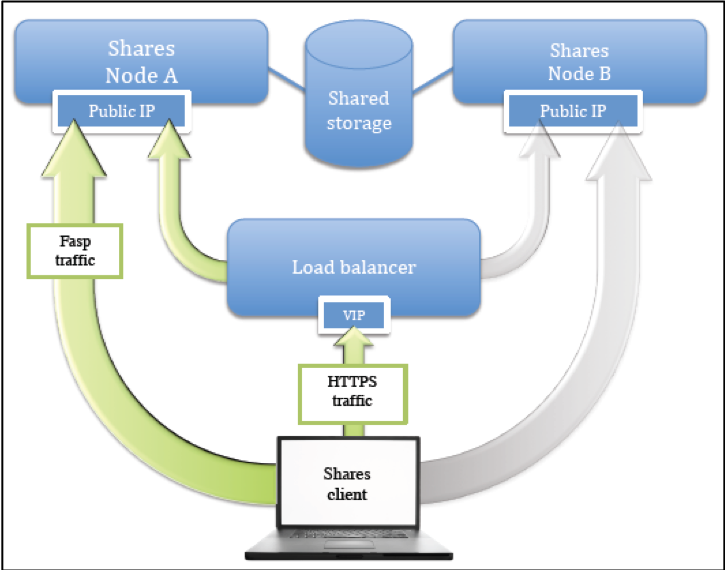
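For sizing in Architecture Type 1, the throughput the load balancer must carry is roughly the sum of the concurrent transfer rates plus the web traffic, since everything crosses the VIP. The sketch below assumes hypothetical planning inputs (number of concurrent transfers, average rate per transfer, web overhead); the numbers in the example are illustrative, not measured or recommended values.

```python
def required_lb_throughput_gbps(concurrent_transfers: int,
                                per_transfer_mbps: float,
                                web_overhead_mbps: float = 0.0) -> float:
    """Aggregate throughput (Gbit/s) crossing the VIP in Architecture Type 1.

    All inputs are hypothetical planning figures, not Aspera defaults.
    """
    if concurrent_transfers < 0 or per_transfer_mbps < 0 or web_overhead_mbps < 0:
        raise ValueError("inputs must be non-negative")
    # FASP payload traffic plus web traffic, all routed through the load balancer.
    total_mbps = concurrent_transfers * per_transfer_mbps + web_overhead_mbps
    return total_mbps / 1000.0  # 1 Gbit/s = 1000 Mbit/s


# Hypothetical example: 20 simultaneous transfers at 250 Mbit/s each.
print(required_lb_throughput_gbps(20, 250))  # 5.0
```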

Architecture Type 2: Load Balancer Redirects Web Traffic Only

An alternative architecture uses the load balancer for web traffic only. In most respects, this architecture resembles the first one: it uses a load balancer with a virtual IP address (VIP), plus an FQDN that points to the VIP so that clients can access the web application. However, in this architecture the load balancer redirects only web traffic.
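The practical difference between the two architectures is which entry point a client uses for each kind of traffic. The following sketch encodes that split; the `Arch` enum, the traffic labels, and `entry_point` are hypothetical names used only for illustration.

```python
from enum import Enum


class Arch(Enum):
    REDIRECT_ALL = 1  # Architecture Type 1: all traffic through the VIP
    WEB_ONLY = 2      # Architecture Type 2: only web traffic through the VIP


# Traffic classes named in this section; the labels are illustrative.
TRAFFIC = ("web (HTTPS/TCP)", "transfer control (SSH/TCP)", "transfer data (FASP/UDP)")


def entry_point(arch: Arch, traffic: str) -> str:
    """Where a client sends each traffic class under each architecture."""
    if traffic not in TRAFFIC:
        raise ValueError(f"unknown traffic class: {traffic}")
    if arch is Arch.REDIRECT_ALL or traffic.startswith("web"):
        return "load balancer VIP"
    # Architecture Type 2: FASP-related traffic bypasses the load balancer.
    return "node IP from round-robin DNS"


for arch in Arch:
    for t in TRAFFIC:
        print(f"{arch.name:12} {t:28} -> {entry_point(arch, t)}")
```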

Traffic related to FASP-based transfers flows directly between the clients and the transfer services running on each node. To balance this traffic and fail over when a node is unavailable, Shares uses a second FQDN, distinct from the one that points to the VIP, which resolves to a list of the public IP addresses of the nodes. The DNS server responsible for this name must return a round-robin list, presenting the entries in a different order in response to each new query, so that successive queries from different clients see a different IP address at the top of the list. Because High Speed Transfer Server clients use only the IP address at the top of the list to contact the transfer server, different clients connect to different transfer servers (nodes A and B).
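A round-robin DNS answer can be modeled as a list that rotates after every query, with each client taking only the first entry. In this sketch, `RoundRobinDNS` is a hypothetical toy resolver and the addresses come from the 203.0.113.0/24 documentation range; a real deployment typically configures multiple A records for the name on its DNS server instead.

```python
from collections import deque


class RoundRobinDNS:
    """Toy resolver that rotates its address list on every query (illustration only)."""

    def __init__(self, addresses: list[str]) -> None:
        self._records = deque(addresses)

    def resolve(self) -> list[str]:
        answer = list(self._records)
        self._records.rotate(-1)  # the next query sees the next address on top
        return answer


# Hypothetical public addresses for nodes A and B.
dns = RoundRobinDNS(["203.0.113.10", "203.0.113.20"])
for client in ("client-1", "client-2", "client-3"):
    top = dns.resolve()[0]  # clients use only the first address
    print(client, "->", top)
# client-1 -> 203.0.113.10
# client-2 -> 203.0.113.20
# client-3 -> 203.0.113.10
```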

When a client cannot connect to a transfer server (because that server is unavailable), it re-resolves the FQDN and tries the IP address at the top of the new list. As soon as the top IP address points to a healthy node, the client performs the transfer successfully. This process typically takes less than a minute. To keep the failover delay as short as possible, the Time-To-Live (TTL) value of the round-robin FQDN records must be kept as short as possible on the DNS server.

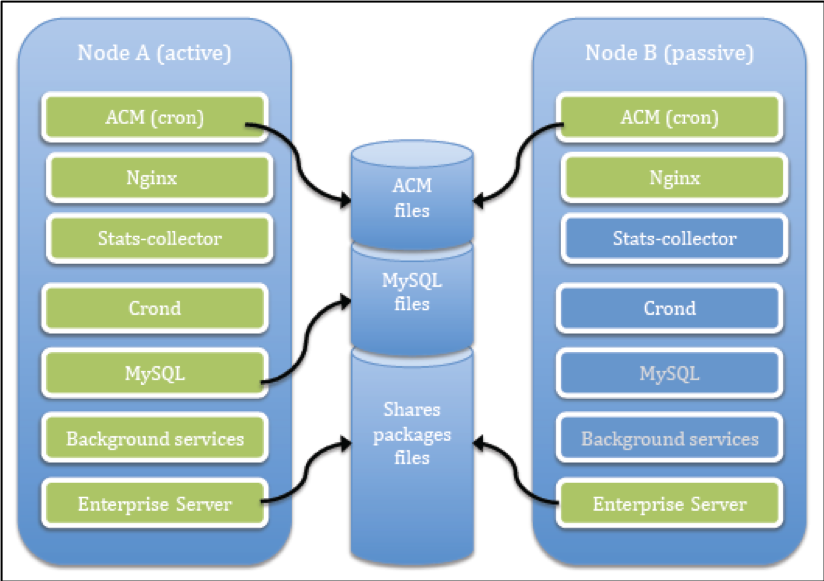
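The client-side failover loop described above can be sketched as "resolve, try the top address, retry". This is a simplified model under stated assumptions: `connect_with_failover`, `simulated_resolve`, and the retry parameters are hypothetical, and the sketch ignores resolver caching. In practice a caching resolver may keep returning the same list until the TTL expires, which is why a short TTL shortens the failover delay.

```python
import time
from collections import deque
from typing import Callable


def connect_with_failover(resolve: Callable[[], list[str]],
                          is_reachable: Callable[[str], bool],
                          max_attempts: int = 10,
                          retry_delay_s: float = 0.0) -> str:
    """Re-resolve the FQDN and try the top address until a healthy node answers.

    All parameters are hypothetical stand-ins for real client behavior.
    """
    for _ in range(max_attempts):
        top = resolve()[0]  # clients use only the first address
        if is_reachable(top):
            return top
        time.sleep(retry_delay_s)  # wait before re-resolving
    raise ConnectionError("no reachable transfer node")


# Simulated round-robin list in which node A is down.
records = deque(["node-a", "node-b"])


def simulated_resolve() -> list[str]:
    answer = list(records)
    records.rotate(-1)
    return answer


print(connect_with_failover(simulated_resolve, lambda ip: ip != "node-a"))  # node-b
```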

Shares Services Stack

Regardless of which architecture is deployed, both Shares nodes are considered active because clients can contact either of them to reach the web application or the transfer server. However, not all IBM Aspera services run on both machines at the same time.

Some services are active/active and run on both nodes; others are active/passive and run on only one of the two nodes. The node that runs all the services is called the active node, and the node that runs only the active/active services is called the passive node.

In the diagram above, the mysqld, crond, stats-collector, and shares-background services run only on the active node. Both nodes can access the ACM files and the Shares packages simultaneously (read-write mode), but the MySQL data files are accessed by only one MySQL instance at a time: the one running on the active node.

The following table lists each service and its location:

| Service Name | Type | Location |
|---|---|---|
| nginx | active/active | Runs on both nodes |
| mysqld | active/passive | Runs on the active node only |
| crond | active/passive | Runs on the active node only |
| stats-collector | active/passive | Runs on the active node only |
| shares-background | active/passive | Runs on the active node only |
| asperahttpd | active/active | Runs on both nodes |
| asperanoded | active/active | Runs on both nodes |
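The service placement rules can be captured as data, which makes it easy to derive what each node should be running. In this sketch, `Service` and `services_for` are hypothetical helpers, and crond is modeled as active/passive because it runs only on the active node.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Service:
    name: str
    ha_type: str  # "active/active" or "active/passive"


# Services and HA types from the table in this section.
SERVICES = [
    Service("nginx", "active/active"),
    Service("mysqld", "active/passive"),
    Service("crond", "active/passive"),
    Service("stats-collector", "active/passive"),
    Service("shares-background", "active/passive"),
    Service("asperahttpd", "active/active"),
    Service("asperanoded", "active/active"),
]


def services_for(role: str) -> list[str]:
    """Services expected on a node with the given role ("active" or "passive")."""
    if role == "active":
        return [s.name for s in SERVICES]  # the active node runs every service
    if role == "passive":
        # The passive node runs only the active/active services.
        return [s.name for s in SERVICES if s.ha_type == "active/active"]
    raise ValueError(f"unknown role: {role}")


print("active: ", services_for("active"))
print("passive:", services_for("passive"))
```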